BIM is not Big Data

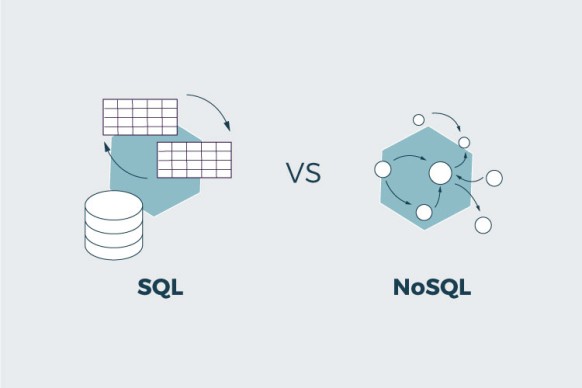

In the following article, Fernando Iglesias Gamella, Professor of the Master’s in Global BIM Management at ZIGURAT (taught in Spanish), explains the relationship between BIM and Big Data and why, for the time being, the two terms are not strictly interrelated. In this context, Fernando explains, sensorization and the Digital Twin are emerging as a way to remove the existing boundaries between the two concepts.

Saying that we live in a world surrounded by data is no longer a novelty. If we think about storing data, it seems logical to talk about databases. For the vast majority of BIM users, databases are the various "Data Sheets" that contain the information extracted from the models, whether stored in a spreadsheet (Excel), an Access database or several .csv files loaded into a SQL database that can be queried. In short, regardless of volume and storage medium, this is data structured in rows and columns, with relationships between the records.

This way of classifying and storing data is nothing new. What is new is that today's tools let us store more data and extract it more quickly. We have been working with this type of database since Edgar Codd introduced the relational model in 1970, so its reign has now lasted around 50 years.
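To make the rows-and-columns model concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table names, column names and values are invented for illustration and do not come from any particular BIM tool; they simply stand in for two data sheets exported from a model and related through a shared key.

```python
import sqlite3

# Hypothetical data-sheet rows exported from a BIM model (invented values).
elements = [
    ("W-001", "Wall", "Level 1"),
    ("W-002", "Wall", "Level 2"),
    ("S-001", "Slab", "Level 1"),
]
quantities = [
    ("W-001", 12.5),
    ("W-002", 10.0),
    ("S-001", 40.0),
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE elements (element_id TEXT PRIMARY KEY, category TEXT, level TEXT)"
)
conn.execute(
    "CREATE TABLE quantities ("
    "element_id TEXT REFERENCES elements(element_id), volume_m3 REAL)"
)
conn.executemany("INSERT INTO elements VALUES (?, ?, ?)", elements)
conn.executemany("INSERT INTO quantities VALUES (?, ?)", quantities)

# A typical relational query: total volume per category,
# joining the two tables on their shared key.
rows = conn.execute(
    """
    SELECT e.category, SUM(q.volume_m3)
    FROM elements AS e
    JOIN quantities AS q ON q.element_id = e.element_id
    GROUP BY e.category
    """
).fetchall()
conn.close()

volume_by_category = dict(rows)
print(volume_by_category)
```

Every record has to fit the fixed columns declared up front, which is exactly the property that makes this model comfortable for schedules and quantity take-offs.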

A lot of data is not Big Data

So far, so clear. However, the mere fact that data exists, is generated and multiplies does not mean that it adds value. Whatever its origin, what matters is knowing what to do with it. A BIM model is a data source like any other, but data does not become Big Data simply by existing, however abundant it may be. It is not about accumulating, but about extracting value. Processing data means converting it into useful information that can be analyzed and, from that analysis, drawing lessons that lead to optimization.

The "3Vs" of Big Data

Moreover, storing data in these relational databases cannot be considered Big Data technology. Strictly speaking, the reason is that they do not satisfy the "3 Vs" of Big Data: they cannot store a large volume of varied data that changes at high velocity. It is in these 3 Vs that Big Data reigns supreme. That said, the number of "Vs" grows as fast as the data itself. Whether through naming, marketing or spontaneous generation, people now talk about five, eight or even ten "Vs". For the time being, let's stick with three.

Volume: What is big data? Just ask yourself what happens every minute on the Internet.

What Happens in an Internet Minute in 2022: LocaliQ

Just look at this graphic to see what we mean by "BIG" data: information being generated and multiplying exponentially. The analysis of this data can only be approached with Big Data tools, because it cannot be structured in the traditional way, i.e. with relational databases. This data is measured in petabytes, and 1 PB is roughly 5.37 million BIM models of 200 MB each.

Variety: the data must be varied, which means being able to combine data of different formats (string, number, boolean... or text, video, audio, JSON, HTML, CSV), whether structured, semi-structured or unstructured.

Velocity: the data loses value on the fly, because it is constantly superseded by newer data.
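The petabyte comparison can be checked with a few lines of arithmetic. This sketch assumes binary units (1 PB taken as 2^50 bytes and 1 MB as 2^20 bytes), which is the convention that reproduces the 5.37 million figure; with decimal units the result is a round 5 million.

```python
# Binary units (assumption): 1 PB = 2**50 bytes, 1 MB = 2**20 bytes.
models_binary = 2**50 / (200 * 2**20)

# Decimal units, for comparison: 1 PB = 10**15 bytes, 1 MB = 10**6 bytes.
models_decimal = 10**15 / (200 * 10**6)

print(f"binary units:  {models_binary / 1e6:.2f} million models per PB")
print(f"decimal units: {models_decimal / 1e6:.2f} million models per PB")
```

Either way, a single petabyte holds on the order of five million models of that size.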

NoSQL databases

All Big Data databases are themselves NoSQL databases. The term sounds intangible, almost a paradigm of ambiguity, but it simply refers to databases that break with the relational schema and therefore coexist very well with the "Vs" mentioned above. We no longer think in tables, rows or columns, but in aggregated data of many different kinds. This is where the most common tools in this technology appear: Hadoop, MongoDB, DynamoDB (Amazon), Cassandra (originally developed at Facebook), BigTable (Google)... As we can see, each of the big technology companies backs its own horse.

BIM = Big Data: how far are we?

It seems that at this point we have lost the scale and the focus. A BIM model is not at that scale, unless we have five million of them. What, then, about the discipline that deals with cleaning, preparing and analyzing data? Isn't that what we do with the information housed in a BIM model when we want it to provide value in any of the lifecycle phases? Well, that is Data Science. Or rather, data science on a small scale: extracting value from an analysis of the data and managing the information with statistical tools to detect and correct errors. A high prediction rate helps to anticipate the right decision and therefore to optimize processes. All this comes with powerful and user-friendly data visualization tools, and it is already a reality in our industry.

The most common way to ensure the traceability of elements between the various software tools in a BIM process today is through GUIDs, UniqueIDs, IDs or classification systems. Extrapolating this way of working, would it be correct to control the traceability of all the information that an individual generates by always using a common key, for example an ID? It seems unthinkable. I insist again: for the moment the Volume is not big enough, and we can get by with GUIDs as our compass.
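As an illustration of GUID-based traceability, the sketch below reconciles two exports of the same model by their shared key. The GUID strings, field names and values are hypothetical placeholders, not the output of any specific authoring or costing tool.

```python
# Hypothetical exports from two tools, both keyed by the element GUID.
authoring_export = {
    "guid-001": {"category": "Wall", "area_m2": 30.0},
    "guid-002": {"category": "Door", "area_m2": 2.1},
    "guid-003": {"category": "Slab", "area_m2": 85.0},
}
costing_export = {
    "guid-001": {"unit_cost": 120.0},
    "guid-003": {"unit_cost": 95.0},
    "guid-004": {"unit_cost": 40.0},
}

# Elements present in both exports: merge their attributes.
shared_guids = authoring_export.keys() & costing_export.keys()
matched = {
    guid: {**authoring_export[guid], **costing_export[guid]}
    for guid in shared_guids
}

# Elements that lost traceability on one side or the other.
missing_in_costing = sorted(authoring_export.keys() - costing_export.keys())
unknown_in_costing = sorted(costing_export.keys() - authoring_export.keys())

print(sorted(matched))
print(missing_in_costing)
print(unknown_in_costing)
```

At the scale of a single project this kind of key matching is cheap, which is why the common key works well enough for now.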

JSON, a new hope

However, there is life beyond the GUID. MongoDB works with JSON (JavaScript Object Notation), a text format for data exchange and a more than feasible entry point for the exchange of IFC models. JSON eases the impedance mismatch implicit in relational databases, that is, the incompatibility between the way data is naturally structured and the tables it has to be stored in. MongoDB allows us to work with collections, which are simply groups of documents of different shapes, with no restriction placed on how we work with them. JSON lets us store information in a more convenient way, regardless of the kind of data stored. It is not necessary to decompose the information into individual values in the form of parameters stored in a relational database.

Perhaps, after all of the above, we come to the conclusion that BIM models do not need to be part of big data technology. And, today, that is the case. We are far away. At the moment, in terms of data generation, BIM is a grain of sand in the desert compared to the figures presented above. We can handle relational databases and take them to the limit of their possibilities: perform complex queries, group information in summary or pivot tables, extract reports with colors and graphics (Power BI, Tableau). But the frontier of change is closer than we think.
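To show what storing information as JSON documents looks like, here is a small sketch using only Python's standard `json` module. A plain Python list stands in for a MongoDB collection (no MongoDB API is used), and the GUIDs, field names and values are hypothetical.

```python
import json

# Two documents with different shapes can live in the same collection.
wall = {
    "guid": "hypothetical-guid-wall-001",
    "type": "Wall",
    "properties": {"fire_rating": "EI60", "is_external": True, "thickness_mm": 200},
    "materials": ["concrete", "insulation"],
}
door = {
    "guid": "hypothetical-guid-door-001",
    "type": "Door",
    "host_guid": "hypothetical-guid-wall-001",
    "dimensions": {"width_mm": 900, "height_mm": 2100},
}

collection = [wall, door]  # stand-in for a document collection

# Serialize to JSON text for exchange, then read it back.
text = json.dumps(collection, indent=2)
restored = json.loads(text)

# Query nested data directly, without flattening it into columns first.
external_guids = [
    doc["guid"]
    for doc in restored
    if doc.get("properties", {}).get("is_external")
]
print(external_guids)
```

Nested properties, lists and mixed value types travel together in one document, so nothing has to be split into separate parameter rows before it can be stored.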

Sensorization and digital twins: an opportunity to bring BIM and Big Data closer together

How can we come to produce information at anything like the rate of the web? Sensorization. This will multiply the data generated. To the degree that we are able to build Digital Twins that serve as the source of all the data captured by sensors, we will approach the frontier of Big Data and call into question the use of the relational database model. With sensors, and with the management of the information they produce through Digital Models, we enter the 3V data league.

BIM is the gateway that connects the digital twin and the physical asset (whether in the design and construction phase or in the operation phase) with existing technologies for managing big data on a massive scale. But if that connection is to be successful, it will always occur outside the BIM model. If we understand the model as a three-dimensional representation backed by layers of information, Big Data technologies would be essential to manage those layers. BIM is not Big Data, yet.

Author: Fernando Iglesias Gamella, Professor responsible for the Revit design module of the Master’s in Global BIM Management at ZIGURAT